At the House Judiciary Committee's hearing yesterday on white nationalism and hate crimes, Google admitted to manipulating search results based on subjective factors when content was controversial, i.e., "on the border." Only Rep. Tom McClintock of California pushed back hard, saying the power to decide what speech is acceptable and what is not can be dangerous.

I've personally had an innocuous comment blocked on Instagram before I could post it, which shows the reach of AI. The reason given in the pop-up box was that I was "bullying" an Iowa wrestling coach and former Olympic contender, but no reasonable human being would classify my criticism as bullying. As Rep. McClintock pointed out, "bullying" can be a pretext to censor legitimate criticism and, in doing so, prevent progress and transparency. On a more basic level, giving corporations power over acceptable speech could also become a way to extract an "advertising tax" from individuals and businesses that must resolve an image issue after being caught in an algorithm's web. Such a dynamic feeds monopolies (and makes antitrust enforcement more difficult) by favoring large businesses over small ones.

People in positions of power ought to be scrutinized fairly and on all the facts. An unaccountable entity--whether corporate or government--picking and choosing which facts to include or exclude enables poor leadership. Worse, it prevents local leaders and voters from properly utilizing their powers, causing a loss of credibility on all sides and potential backlash (e.g., President Trump). By the way, that Iowa wrestling coach I tried posting a comment about? Almost no one knows he was accused of sexual assault, leading to an interesting (and public) court battle (Brands v. Sheldon Community School, 671 F. Supp. 627 (N.D. Iowa 1987)).

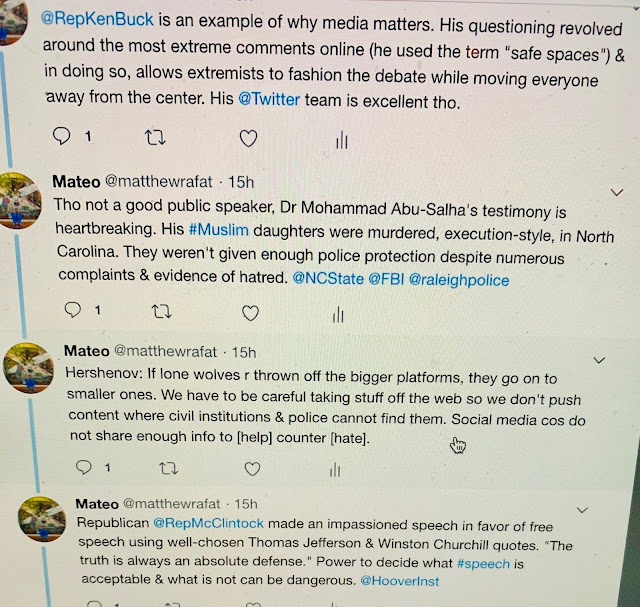

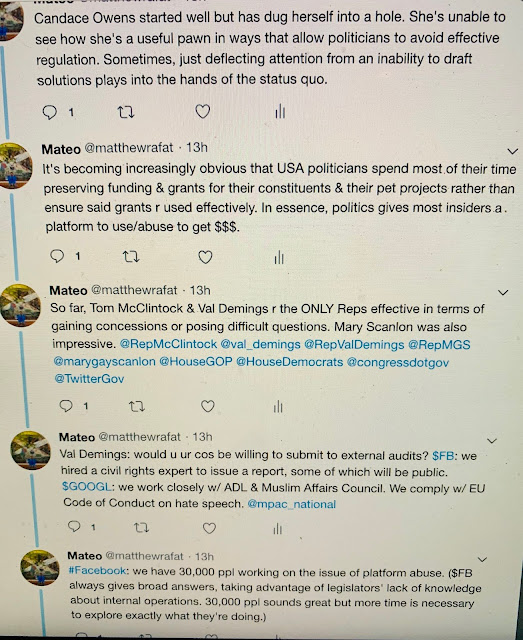

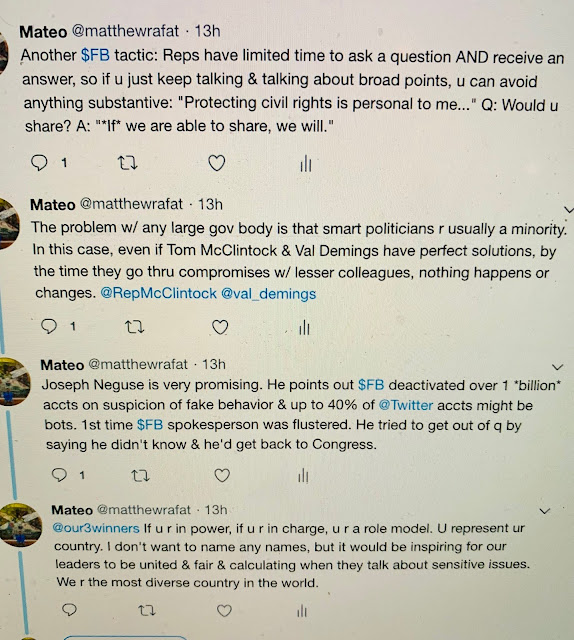

My Twitter recap of the hearing is below:

Two other issues arose: 1) many states lack appropriate hate crime laws, so the federal government should consider establishing a better minimum baseline; and 2) both state and federal laws do little or nothing to address widespread "doxxing" problems, i.e., the disclosure of private contact information specifically for purposes of harassment.
